02.06.08

VanDev talk summary

This is a somewhat expanded version of the talk I’m giving to the Vancouver Software Developers’ Network tomorrow. I don’t think there is much that I haven’t said in previous blog postings, but I wanted to gather it all in one place.

“What is the variability between programmers?” is a question I was curious about when I started my MS CS at UBC. In Silicon Valley, I’d heard a rule of thumb that there was a 100-to-1 difference in programmer productivity. My husband had heard ten-to-one; Joel on Software quotes one of his old profs to suggest that it is ten-to-one or larger.

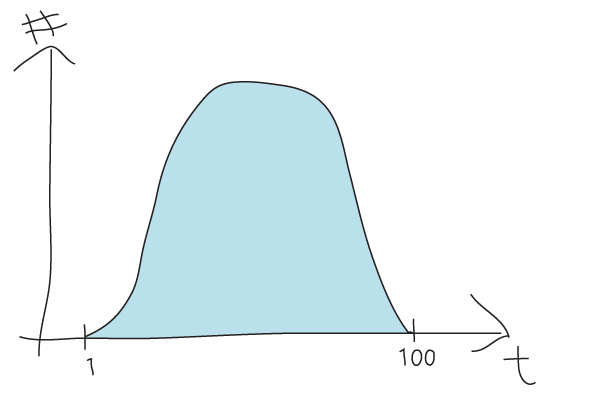

Here is a drawing (deliberately crude, so nobody would think it represents actual data) of what I thought the histogram would look like, with the number of programmers who finish a task in a certain time on the y-axis and the time it takes on the x-axis.

The first problem here is that this just measures time, not quality. It is presumably faster to write lousy code than to write code that is clean, easy to read, easy to maintain, etc. That is a legitimate issue, but unfortunately my reading of peer-reviewed papers has not convinced me that anybody really knows how to measure quality.

There are some people who have measured coding speed, however. I have reported previously on experiments by DeMarco and Lister, Dickey, Sackman, Curtis, and Ko that measure the time it takes a number of programmers to do a task. What I found is that the time histogram curve actually looks like this:

Observations:

- The worst programmer isn’t 100 or 10 times slower than the best; the worst programmer, found at the end of a very long tail, is infinitely worse. If you think about it, I am going to be infinitely faster than a walrus. It is hard to program with those flippers. (I actually worked on a project with a contractor who, after a year, was let go because despite repeated requests, he had not checked in a single line of his code. I think that counts as infinitely slow.)

- The median programmer is about two to four times slower than the fastest on single tasks. (Because of regression to the mean, this advantage should get smaller with many tasks.)

- The curve is wickedly shifted to the left. This makes sense: there isn’t much you can do to get faster, but a LOT of things you can do to get slower. (Not ever check in your code, for example.)
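The regression-to-the-mean remark in the second observation can be illustrated with a small simulation. This is only a sketch under loudly stated assumptions: the log-normal shape, the `sigma` value, and the idea that every task time is an independent draw from the same distribution (so all programmers have identical underlying skill) are invented for illustration and do not come from any of the studies mentioned above. The function name `median_to_fastest_ratio` is likewise hypothetical.

```python
import random
import statistics


def median_to_fastest_ratio(num_programmers, num_tasks, sigma=0.5, seed=0):
    """Ratio of the median total time to the fastest total time.

    ASSUMPTION (illustration only): each task time is an independent
    log-normal draw, identical for every programmer. Real data would
    also include genuine skill differences.
    """
    rng = random.Random(seed)
    totals = [
        sum(rng.lognormvariate(0.0, sigma) for _ in range(num_tasks))
        for _ in range(num_programmers)
    ]
    return statistics.median(totals) / min(totals)


# Compare a single task against the sum of many tasks.
single_task_ratio = median_to_fastest_ratio(num_programmers=50, num_tasks=1)
many_tasks_ratio = median_to_fastest_ratio(num_programmers=50, num_tasks=50)

print(f"median/fastest over 1 task:   {single_task_ratio:.2f}")
print(f"median/fastest over 50 tasks: {many_tasks_ratio:.2f}")
```

In this toy model the gap shrinks toward 1 as tasks accumulate, because the differences are pure luck. With real skill differences mixed in, the gap should still shrink, just not all the way.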

What implications does this curve have?

- Don’t spend a lot of effort on hiring the absolute best; spend lots of effort on avoiding hiring losers.

- Don’t spend a lot of effort on learning how to type faster; spend lots of effort on figuring out how to avoid getting stuck.

“Don’t get stuck” is easier said than done, of course, but there are things you can do.

- When you have a question — e.g. “Why is Foo set to 3 instead of 5?” — write down three hypotheses for what the answer might be. This can help you avoid confirmation bias. I came up with this idea after reading that breadth-first-ish approaches to problems are more successful than more depth-first-ish searches. I don’t have any research on it, but writing three hypotheses helps me a lot.

- Explain your question/problem to someone. It doesn’t even need to be someone who knows anything about coding. There is little academic research on verbalizing, but there are lots of anecdotes that “rubber ducking” is useful, and that has been my experience as well. Verbalizing might also be part of why I find writing down three hypotheses so useful.

- Ask for help! Someone familiar with the particular area that you are investigating might be harder to find than a rubber duck, but sometimes can be more useful.

- Note to managers: your new hires will probably feel uncomfortable interrupting you to ask for help. Instead of making them come interrupt you, go to them. Once or twice per day, stop by their office and spend some time with them. Ask to see what they are doing; pair program with them for a little while. When they are new, you are more likely to catch them being stuck than not being stuck, so you can proactively un-stick them. Even if they are not stuck, you can still probably give good pointers on tools and techniques.

- Use tools to help you find the answers to your questions! There are all kinds of great tools available now that can help you answer questions.

- Omniscient debuggers: Debuggers like odb and undoDB keep track of every variable’s state change and then let you trace backwards to where that variable changed state. (Note: Cisco also made an Eclipse plugin for omniscient debugging in C++, but for internal use only.)

- Many code coverage tools will also color lines based on whether they were executed or not. This is a cheap way to see which execution paths were taken! Examples include Visual Studio, the Intel C++ Code Coverage Tool, and the Eclipse plug-in EclEmma.

- One of the questions that I frequently ask is, “How do I get information from class Foo over to class Bar?” Prospector and Strathcona can help with that. Strathcona looks for examples of existing code in your code base that gets you from Foo to Bar; Prospector looks for existing code, and also traverses the tree of classes that can be reached from a given class to answer that question.

- Use tools to keep you from having to get stuck in the first place.

- FindBugs looks for code that “looks funny” and which is likely to have errors in it.

- JML allows the user to specify all kinds of “contracts” about how a method will work — preconditions, post-conditions, invariants, etc — in a very rich way. If anybody breaks those contracts (e.g. by passing illegal arguments), it gets flagged. It sounds like it would be really tedious to generate all those promises, but the tool Daikon can help. Daikon can generate promises based on actual run data; if something changes to violate the promises, it will flag it. (The contracts also work as extra documentation.)
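To give a feel for what a contract looks like, here is a minimal sketch in Python. It is not JML syntax and not how JML or Daikon work internally; the `contract` decorator, the `ContractViolation` exception, and the `square_root` function are all hypothetical names made up for this illustration. It only shows the basic idea: a precondition is checked before the method runs, a postcondition after, and a violation gets flagged right away.

```python
import functools


class ContractViolation(AssertionError):
    """Raised when a precondition or postcondition does not hold."""


def contract(pre=None, post=None):
    """Hypothetical decorator: check `pre` on the arguments before the
    call and `post` on the result (plus the arguments) after the call."""
    def decorate(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            if pre is not None and not pre(*args, **kwargs):
                raise ContractViolation(
                    f"precondition of {func.__name__} failed")
            result = func(*args, **kwargs)
            if post is not None and not post(result, *args, **kwargs):
                raise ContractViolation(
                    f"postcondition of {func.__name__} failed")
            return result
        return wrapper
    return decorate


@contract(
    pre=lambda x: x >= 0,  # callers must not pass negative numbers
    post=lambda result, x: abs(result * result - x) <= 1e-9 * max(1.0, x),
)
def square_root(x):
    return x ** 0.5


print(square_root(9.0))    # a legal call passes both checks

try:
    square_root(-1.0)      # an illegal argument is flagged immediately
except ContractViolation as err:
    print("flagged:", err)
```

The lambdas also double as documentation: a reader can see what the function expects and promises without reading its body.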

ducky said,

March 26, 2008 at 4:00 pm

Another omniscient debugger: TOD. http://pleiad.dcc.uchile.cl/tod/

ducky said,

June 8, 2008 at 10:50 am

I stumbled across a very early reference to a desired Python omniscient debugger by Michael Salib, Insecticide:

http://web.mit.edu/msalib/www/writings/talks/Europython2004/lightningTalk.pdf

I’ve also heard that Erlang, because it has no side effects, allows you to step forwards and backwards in time.

Best Webfoot Forward » My MS thesis is done! said,

July 24, 2008 at 5:45 pm

[…] measures. I found some, talked about them on my blog, and summarized them in the first part of my VanDev talk. The big take-away for programmers is “Don’t get stuck!” (Note: this part is not […]

BHLog » The wee beginnings of a biohaskell tutorial? — and some thoughts on programming productivity said,

August 14, 2008 at 4:46 am

[…] between the worst and best programmer average. And, disregarding the span from worst to best, the shape of the distribution has certain ramifications, […]

Best Webfoot Forward » programmer productivity update said,

November 3, 2008 at 10:17 am

[…] between kinda-normal programmers is about 2. It’s nice to find supporting evidence for what I’d reported earlier. Here’s the money graph: Time to complete a task probability […]

Best Webfoot Forward » Gender and programming said,

May 20, 2009 at 11:45 am

[…] note that because there is such asymmetry in task completion time between above-median and below-median, you might expect that a bunch of median programmers are, in the aggregate, more productive than a […]

Pedro said,

April 3, 2012 at 7:45 am

Hi!

Again I would suggest you look at some algorithmic programming contests. I think that for a specific task, the distribution of time-to-completion may be the one described but if you look at harder tasks the distribution will tend to “spread”. That is, the “median” programmer will take a lot longer than the best one, which can mean, for a sufficiently hard task, that he or she is not able to complete it in a reasonable time frame.